Now for the exciting conclusion on how to isolate your vSphere Replication and NFC traffic! (for Part 1 click here)

Note: The vSphere Replication appliances have to be modified directly to configure static networking on each new vnic. This means editing config files on the appliance, which is not officially supported, so do this at your own risk!

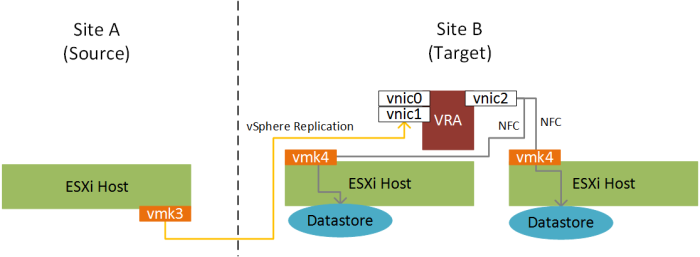

First, an overview of my lab environment:

In my lab I have 2 x vSphere Replication 6.1 appliances, vr-01b and vr-01c, plus centos01, a simple CentOS 7 VM which will be used to test the replication. Also note that in my test setup I am using VDSs; however, the process below should still work with standard switches.

Note: Before starting, make sure any existing replication jobs have been stopped, as performing the below will interrupt any replication you currently have going!

Here is an overview of what needs to be done:

- Configure the vSphere Replication and NFC dvportgroups

- Configure VMkernel adapters for vSphere Replication and NFC traffic

- Configure VR and NFC ESXi static routes

- Create vnics on VR Appliances for vSphere Replication and NFC traffic

- Set IP Address for incoming storage traffic in VAMI

1. Configure the vSphere Replication and NFC dvportgroups

On your VDS, configure your dvportgroups for the outbound vSphere Replication traffic (along with its applicable VLAN) and the inbound NFC traffic (along with its applicable VLAN). On my setup this looks like the below:

- Datacenter “ONE” (Site A)

    - bmanonelab_one-VDS01

        - vr_one-vds01: vSphere Replication

            - Active Uplinks: Uplink 1

            - Standby Uplinks: Uplink 2

            - Unused Uplinks: Uplink 3, Uplink 4

        - vrnfc_one-vds01: vSphere Replication NFC

            - Active Uplinks: Uplink 2

            - Standby Uplinks: Uplink 1

            - Unused Uplinks: Uplink 3, Uplink 4

- Datacenter “TWO” (Site B)

    - bmanonelab_two-VDS01

        - vr_one-vds01: vSphere Replication

            - Active Uplinks: Uplink 1

            - Standby Uplinks: Uplink 2

            - Unused Uplinks: Uplink 3, Uplink 4

        - vrnfc_one-vds01: vSphere Replication NFC

            - Active Uplinks: Uplink 2

            - Standby Uplinks: Uplink 1

            - Unused Uplinks: Uplink 3, Uplink 4

Note: For my dvportgroups above I have segregated the vSphere Replication and NFC traffic to a single active uplink each on the VDS (i.e. Uplink 1 for VR and Uplink 2 for NFC). I don’t believe this is necessary to get this to work. However, if you do have multiple active uplinks for each of your VR/NFC dvportgroups, make sure the corresponding vmnics have the VLAN for those networks trunked accordingly.

2. Configure VMkernel Adapters for vSphere Replication and NFC traffic

Now that the dvportgroups have been created and their uplinks assigned, we need to create the VMkernel adapters on each of the hosts for both vSphere Replication and NFC traffic:

1. On your distributed switch, go to Add and Manage Hosts -> Manage host networking -> Attach your hosts (select template mode) -> Manage VMkernel adapters (template mode).

2. In the top pane, click New adapter.

3. Under Select target device, select an existing network and choose the dvportgroup created earlier for vSphere Replication traffic (e.g. vr_one-vds01), then click Next.

4. Under Available services, select vSphere Replication traffic, then click Next.

5. Under IPv4 settings, set the IP details for the vSphere Replication VMkernel adapter, then click Finish.

6. Repeat steps 2 – 5 above for the vSphere Replication NFC traffic, this time choosing the NFC dvportgroup (e.g. vrnfc_one-vds01).

7. Once the two new VMkernel adapters have been created for your template host, apply them to all other hosts, then click Next.

8. Under Analyze impact, click Next, then click Finish.

You should now have two new vmkernel adapters for vSphere Replication traffic and NFC traffic on your hosts:
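One way to confirm the new adapters from the ESXi shell is sketched below. It only prints the commands to run, so they can be reviewed first and then piped to `sh` on the host. The vmk names (vmk2 for VR, vmk3 for NFC) are assumptions and will differ per host. The ping targets are the lab gateways used later in this post.

```shell
#!/bin/sh
# Sketch: print the commands that check a VMkernel adapter on an ESXi host.
# The vmk names used below (vmk2, vmk3) are assumptions; check your own hosts.

# $1 = VMkernel interface name, $2 = IP address to ping through that interface
vmk_check_cmds() {
    vmk=$1
    target=$2
    # Show the IPv4 settings assigned to the adapter
    echo "esxcli network ip interface ipv4 get -i $vmk"
    # Show which services (tags) are enabled on the adapter
    echo "esxcli network ip interface tag get -i $vmk"
    # Ping the target using this adapter as the outgoing interface
    echo "vmkping -I $vmk $target"
}

vmk_check_cmds vmk2 192.168.140.1   # VR adapter, Site A VR gateway
vmk_check_cmds vmk3 192.168.150.1   # NFC adapter, Site A NFC gateway
```

To execute rather than print, the output of a single call can be piped to the shell on the host, e.g. `vmk_check_cmds vmk2 192.168.140.1 | sh`.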

3. Configure VR and NFC ESXi static routes

Now that we have configured the VMkernel interfaces for VR and NFC traffic, we have to configure static routes on the ESXi hosts to ensure each type of traffic is directed via the correct gateway for that NIC (as the default gateway is currently that of the management interface). The following will need to be performed on each ESXi host:

- SSH to the ESXi host as root

- Run the following command to set the static route for the vSphere Replication and NFC networks:

Site A – vSphere Replication

esxcli network ip route ipv4 add --gateway 192.168.140.1 --network 192.168.240.0/24

Site A – NFC: Not required in my lab as the NFC interface on the appliance is in the same subnet as the ESXi NFC vmk interfaces. If your VRMS NFC interface and ESXi NFC vmk interfaces are on separate networks, then you will need to configure a static route accordingly.

Site B – vSphere Replication

esxcli network ip route ipv4 add --gateway 192.168.240.1 --network 192.168.140.0/24

Site B – NFC: Not required in my lab as the NFC interface on the appliance is in the same subnet as the ESXi NFC vmk interfaces. If your VRMS NFC interface and ESXi NFC vmk interfaces are on separate networks, then you will need to configure a static route accordingly.

Don’t forget to do this on all of your hosts!
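Since the same route has to be added on every host, a small loop run from an admin workstation may save some typing. This is only a sketch: the host names in `ESXI_HOSTS` are hypothetical, it assumes root SSH access is enabled on each host, and it defaults to a dry run that prints what it would execute.

```shell
#!/bin/sh
# Sketch: add the Site A vSphere Replication static route on several ESXi hosts.
# ESXI_HOSTS is a hypothetical list; replace it with your own hosts.
ESXI_HOSTS="esxi-01a.example.local esxi-02a.example.local"
GATEWAY="192.168.140.1"       # local VR gateway (Site A values from this post)
NETWORK="192.168.240.0/24"    # remote VR network (Site B)
DRY_RUN="${DRY_RUN:-1}"       # 1 = only print the commands, 0 = run them over SSH

# Build the esxcli command used in this post for a given gateway and network.
build_route_cmd() {
    printf 'esxcli network ip route ipv4 add --gateway %s --network %s\n' "$1" "$2"
}

# Print (dry run) or execute the route command on every host in the list.
apply_routes() {
    cmd=$(build_route_cmd "$GATEWAY" "$NETWORK")
    for host in $ESXI_HOSTS; do
        if [ "$DRY_RUN" = "1" ]; then
            echo "[dry-run] ssh root@$host \"$cmd\""
        else
            ssh "root@$host" "$cmd" || echo "route add failed on $host" >&2
        fi
    done
}

apply_routes
```

For Site B, swap the gateway and network values. Afterwards, `esxcli network ip route ipv4 list` on a host shows the resulting routing table.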

4. Create vnics on VR Appliances for vSphere Replication and NFC traffic

Now we need to create the new virtual NICs on the vSphere Replication appliances in each of the sites. As per diagram a) above, in my lab this will be vnic1 and vnic2 (with vnic0 kept for management traffic):

Note: You may get a warning when attempting to edit the appliances that they are managed by “VR Management”. Just ignore this.

- Power-off the vSphere Replication instances in each site (e.g. vr-01b and vr-01c)

- Create a snapshot of your appliances…just in case something goes wrong 😉

- Add two new vnics:

- The first new vnic (vmxnet3) is for vSphere Replication traffic, and will be attached to the “VR” dvportgroup. E.g. in my lab this is vr_one-vds01

- The second vnic (also vmxnet3) is for NFC traffic, and will be attached to the “NFC” dvportgroup. E.g. in my lab this is vrnfc_one-vds01

- Leave vnic0 as it is (i.e. management).

- The VR Appliance (e.g. vr-01b) network config would now look like the following:

- Now power on the appliance, and configure the new network adapters:

1. SSH to the vSphere Replication appliance and log in as root

2. Open /etc/sysconfig/network/ifcfg-eth1 with your favourite editor (e.g. vi) and configure it with the VR IP for that interface. E.g. in my lab:

DEVICE=eth1
BOOTPROTO='static'
STARTMODE='auto'
TYPE=Ethernet
USERCONTROL='no'
IPADDR='192.168.140.40'
NETMASK='255.255.255.0'
BROADCAST='192.168.140.255'

3. Do the same for eth2 (/etc/sysconfig/network/ifcfg-eth2), this time with the NFC IP. E.g. for vr-01b:

DEVICE=eth2
BOOTPROTO='static'
STARTMODE='auto'
TYPE=Ethernet
USERCONTROL='no'
IPADDR='192.168.150.40'
NETMASK='255.255.255.0'
BROADCAST='192.168.150.255'

4. At this point you will also need to set the default gateway by creating the file /etc/sysconfig/network/routes and adding the following, e.g.:

vr-01b:

default 192.168.120.1

vr-01c:

default 192.168.220.1

Now restart the network:

service network restart

5. Configure static routing for the vSphere Replication traffic by creating an ifroute-eth1 and ifroute-eth2 file under /etc/sysconfig/network. This is required as otherwise VR and NFC traffic will attempt to exit via the management interface (vnic0). The contents of ifroute-<interface> will be:

<NETWORK> <GATEWAY> <MASK> <INTERFACE>

For example in my lab this would be:

- vr-01b (ifroute-eth1):

192.168.140.0 192.168.140.1 24 eth1
192.168.240.0 192.168.140.1 24 eth1

- vr-01b (ifroute-eth2):

192.168.150.0 192.168.150.1 24 eth2

- vr-01c (ifroute-eth1):

192.168.240.0 192.168.240.1 24 eth1
192.168.140.0 192.168.240.1 24 eth1

- vr-01c (ifroute-eth2):

192.168.250.0 192.168.250.1 24 eth2
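To avoid typos when hand-editing these files, the ifcfg and ifroute content can be generated and reviewed before it is copied to the appliance. The sketch below renders the vr-01b files from my lab into a staging directory. `OUT_DIR` and the helper function names are hypothetical, and nothing is written to /etc/sysconfig/network.

```shell
#!/bin/sh
# Sketch: render ifcfg-ethN and ifroute-ethN content for a VR appliance.
# OUT_DIR is a hypothetical staging directory; review the files before copying.
OUT_DIR="${OUT_DIR:-${TMPDIR:-/tmp}/vr-netcfg}"

# Compute the broadcast address from a dotted IP ($1) and dotted netmask ($2).
broadcast_of() {
    ip=$1
    mask=$2
    old_ifs=$IFS
    IFS=.
    set -- $ip $mask          # $1..$4 = IP octets, $5..$8 = netmask octets
    IFS=$old_ifs
    printf '%d.%d.%d.%d\n' \
        $(( $1 | (255 - $5) )) $(( $2 | (255 - $6) )) \
        $(( $3 | (255 - $7) )) $(( $4 | (255 - $8) ))
}

# Print ifcfg content in the same style as the examples above.
# $1 = device, $2 = IP address, $3 = netmask
render_ifcfg() {
    dev=$1
    addr=$2
    netmask=$3
    cat <<EOF
DEVICE=$dev
BOOTPROTO='static'
STARTMODE='auto'
TYPE=Ethernet
USERCONTROL='no'
IPADDR='$addr'
NETMASK='$netmask'
BROADCAST='$(broadcast_of "$addr" "$netmask")'
EOF
}

# Print one ifroute line: <NETWORK> <GATEWAY> <MASK> <INTERFACE>
render_route() {
    printf '%s %s %s %s\n' "$1" "$2" "$3" "$4"
}

mkdir -p "$OUT_DIR"
# vr-01b values from the lab described above
render_ifcfg eth1 192.168.140.40 255.255.255.0 > "$OUT_DIR/ifcfg-eth1"
render_ifcfg eth2 192.168.150.40 255.255.255.0 > "$OUT_DIR/ifcfg-eth2"
{
    render_route 192.168.140.0 192.168.140.1 24 eth1
    render_route 192.168.240.0 192.168.140.1 24 eth1
} > "$OUT_DIR/ifroute-eth1"
render_route 192.168.150.0 192.168.150.1 24 eth2 > "$OUT_DIR/ifroute-eth2"
echo "Files written to $OUT_DIR"
```

Once reviewed, the files can be copied into /etc/sysconfig/network on the appliance, followed by `service network restart` as above.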

5. Set IP Address for incoming storage traffic in VAMI

Finally, connect to the VAMI of each appliance (https://<appliance-mgmt>:5480) and log in as root. Under VR -> Configuration, set the “IP Address for incoming Storage Traffic” to the IP that has been assigned on the appliance for VR traffic. E.g. for my lab:

- vr-01b: 192.168.140.40

- vr-01c: 192.168.240.40

And that’s it! Resume/configure replication for your VMs and make sure the replication starts successfully. If it does not, check that the static routes on your ESXi hosts and VR appliances are correct. In my testing, incorrect static routes were the main reason replication failed to start.
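When checking static routes, a quick sanity test is whether the remote address you are trying to reach actually falls inside the network a route covers. The helper below is a minimal sketch of that check in plain shell arithmetic. The function names are my own, and it assumes a shell with 64-bit arithmetic.

```shell
#!/bin/sh
# Sketch: check whether an IPv4 address is covered by a NETWORK/PREFIX route.

# Convert a dotted-quad IPv4 address to a single integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1                 # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed (exit 0) if address $1 lies inside network $2, e.g. 192.168.240.0/24.
ip_in_cidr() {
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    prefix=${2#*/}
    # Build the netmask as an integer from the prefix length
    mask=$(( (4294967295 << (32 - $prefix)) & 4294967295 ))
    [ $(( $addr & $mask )) -eq $(( $net & $mask )) ]
}

# The Site A hosts route 192.168.240.0/24 via 192.168.140.1; confirm that the
# Site B appliance VR address from this post is covered by that route.
if ip_in_cidr 192.168.240.40 192.168.240.0/24; then
    echo "192.168.240.40 is covered by 192.168.240.0/24"
else
    echo "192.168.240.40 is NOT covered by 192.168.240.0/24"
fi
```

If the check fails for the address you set as the “IP Address for incoming Storage Traffic”, the route on the source hosts is pointing at the wrong network.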

Thanks for the info…

What benefits do you see in separating the inbound replication Traffic NIC (vnic1) from the NFC NIC to VMKernel port (vnic2) traffic? Obviously we have inbound and outbound traffic so with “Full Duplex” configured in most cases now, could you not share the function on the same NIC? (If on the same network?) Simplify with 1 x VMKernel for both Replication & NFC traffic?


The main benefit would be security isolation, e.g. a dedicated VLAN for VR traffic and another for NFC, but that depends on how strict the security requirements are in your environment. Yes, you could share the same function on the same vnic; you would just have to ensure that the static route to the NFC vmk ports in the VR appliance’s local site is via that same vnic.


Great write-up! My source and destination replication networks are on the same L2, so no need for static routes. Worked like a charm.


I have tried this on ESXi 6.5 Update 1 and the command:

esxcli network ip route ipv4 add --gateway 192.168.140.1 --network 192.168.240.0/24

does not work, as there is already a route created for this by default with the gateway set as *. Trying to remove this route also fails, with a message that it is created automatically for the corresponding VMK for vREP.

VMware have advised that as the networking between datacentres is a Layer 2 stretched VLAN, there is no need to configure routes, but I am not convinced about this.

Also, with reference to this article: by default there is only one gateway on the vRA appliances, so is there something else you believe needs to be done to completely segregate the traffic?

https://www.starwindsoftware.com/blog/vsphere-replication-traffic-isolation
